I find myself in a place where I can do some really interesting things at the intersection of music and design. Much of the research on sound design is recent, mostly from the last 5 to 10 years. I’ve been doing this since the end of 2018. The process involves rediscovering things I know intuitively about music, but understanding them from a machine’s perspective helps me understand them more deeply and in a different way. My goal is to challenge some established ideas about contemporary music composition.

This chapter reflects on that journey so far. At its core, the journey is about bridging the gap between humans and machines in how we make and understand music. For each phase, I give a short summary of what constitutes each branch (sound, machine and mind).

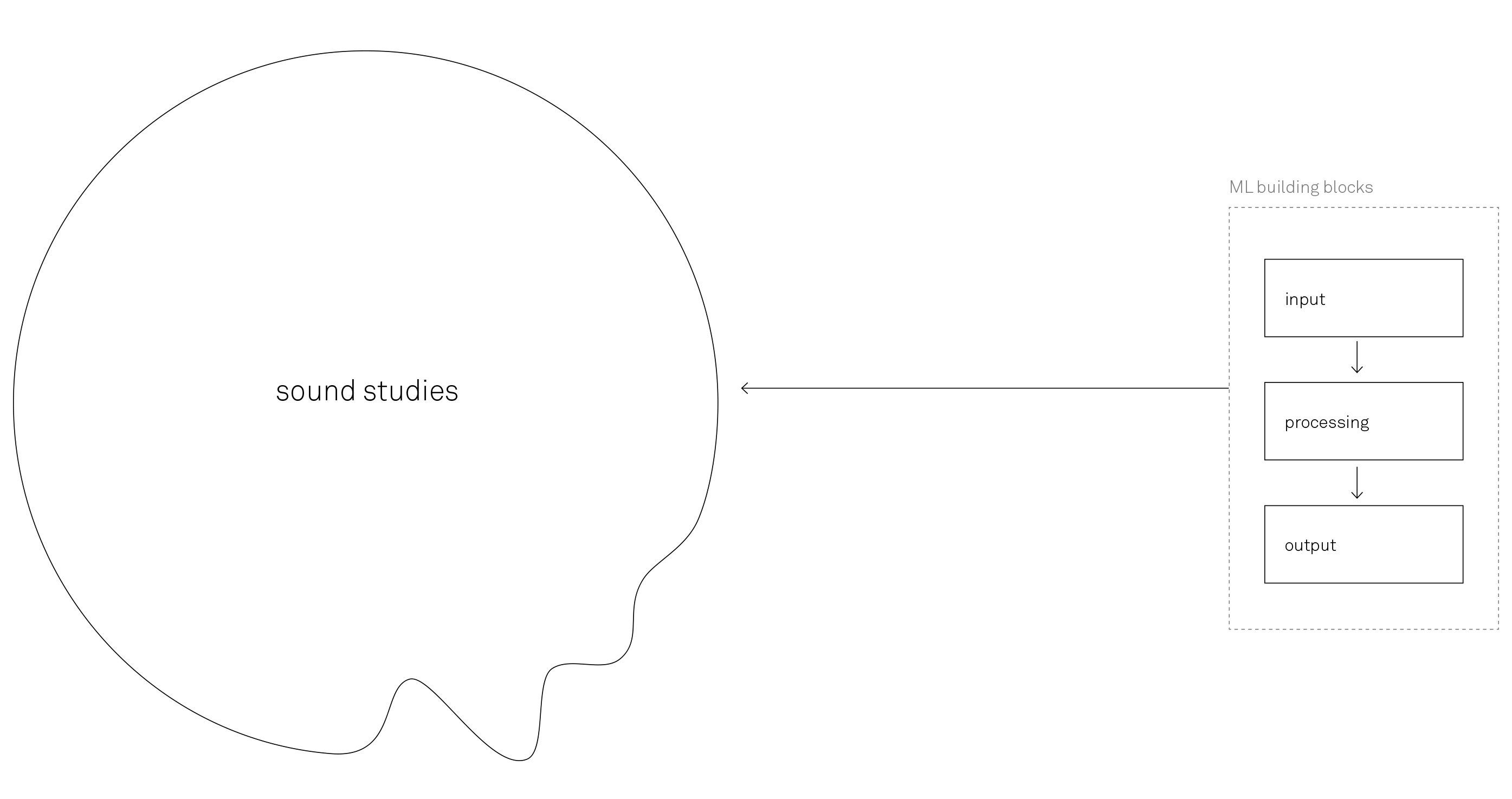

The first step was to take the building blocks of machine learning and apply them in a format suited to further sound studies.

What is the relevance and significance of music?

Almost everyone in the world can tell the difference between a lullaby, a march and a hymn. There has to be something that holds music together and connects people, irrespective of where they are from or how they think about it. Music is both an art and a science, and the two are closely related: both use mathematical principles and logic, blended with creative thinking and inspiration, to arrive at conclusions that are both enlightening and inspiring.

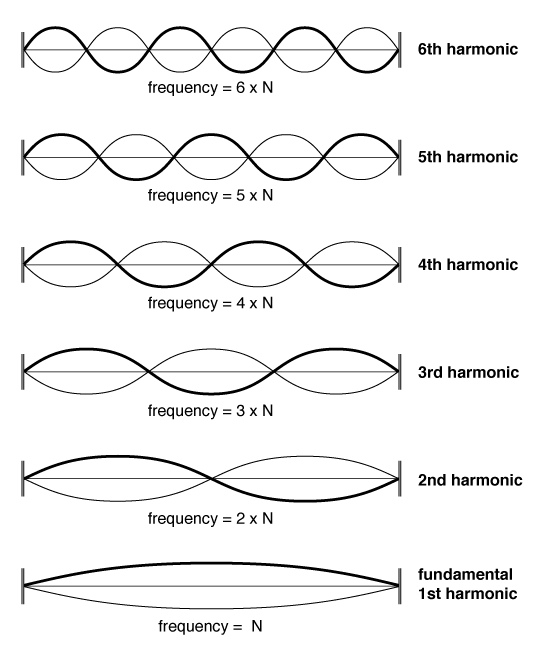

i) Science: There are a number of scientific theories that try to explain music, which suggests that music is as complex and varied a subject as many scientific ones. Science helps us understand both the physics of sound and its psychological effects (a sound acting as a trigger for a reaction). Examples include overtones and harmonics.
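To make the overtone idea concrete: for an idealised vibrating string or air column, the harmonics fall at whole-number multiples of the fundamental frequency. The sketch below lists the first few harmonics of a 110 Hz fundamental (A2). Real instruments deviate slightly from this ideal, so treat it as an illustration rather than a model of any particular instrument.

```python
# Harmonic series of an idealised vibrating string or air column:
# the n-th harmonic sits at n times the fundamental frequency.
def harmonic_series(fundamental_hz: float, count: int) -> list[float]:
    """Return the first `count` harmonics, starting with the fundamental itself."""
    return [fundamental_hz * n for n in range(1, count + 1)]


if __name__ == "__main__":
    for n, freq in enumerate(harmonic_series(110.0, 6), start=1):
        print(f"harmonic {n}: {freq:.1f} Hz")
```

The second harmonic is an octave above the fundamental, and the third is a fifth above the second (a 3:2 ratio), which is often cited as one reason octaves and fifths sound consonant.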


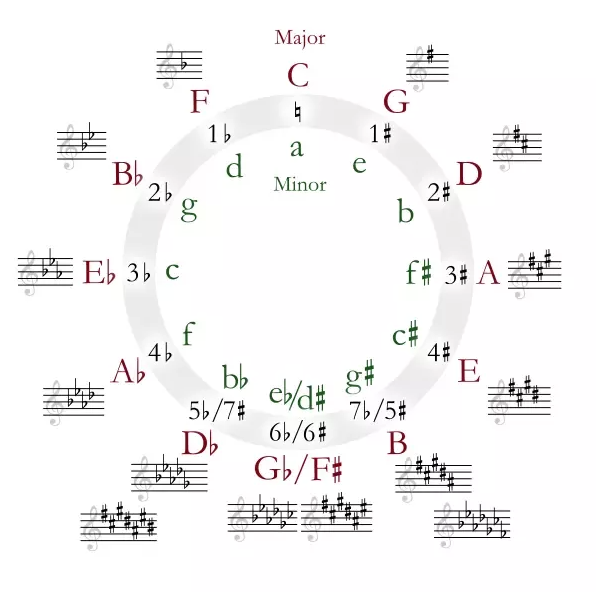

ii) Maths: There is a mathematical side of music that I want to uncover. Musical scales, for example, show a great deal of mathematical symmetry. Both science and music use “formulas” and “theories” to understand the relationships between fundamental structures.
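One place this symmetry shows up is twelve-tone equal temperament, where every semitone multiplies the frequency by the same ratio, 2^(1/12), so twelve semitones make exactly one octave. The sketch below assumes equal temperament with the common convention A4 = 440 Hz = MIDI note 69, and builds a major scale from its step pattern (whole, whole, half, whole, whole, whole, half).

```python
# Twelve-tone equal temperament: each semitone multiplies frequency by 2 ** (1 / 12).
# Assumes the common tuning convention A4 = 440 Hz = MIDI note 69.
A4_MIDI = 69
A4_HZ = 440.0

# Major scale as semitone steps: whole, whole, half, whole, whole, whole, half.
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]


def midi_to_hz(note: int) -> float:
    """Frequency of a MIDI note number in equal temperament."""
    return A4_HZ * 2 ** ((note - A4_MIDI) / 12)


def major_scale(root: int) -> list[int]:
    """MIDI note numbers of a one-octave major scale starting on `root`."""
    notes = [root]
    for step in MAJOR_STEPS:
        notes.append(notes[-1] + step)
    return notes


if __name__ == "__main__":
    c_major = major_scale(60)  # 60 = middle C (C4)
    print(c_major)  # [60, 62, 64, 65, 67, 69, 71, 72]
    print([round(midi_to_hz(n), 2) for n in c_major])
```

Because the step pattern, not the starting note, defines the scale, transposing is just adding a constant to every note number, and in equal temperament that shift also preserves every frequency ratio between the notes.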


iii) Creativity: There is also music as an art form, where the melody or music is inseparable from the person who creates it. Every creative act leaves a message and therefore a distinct “footprint”.


Process Explanation

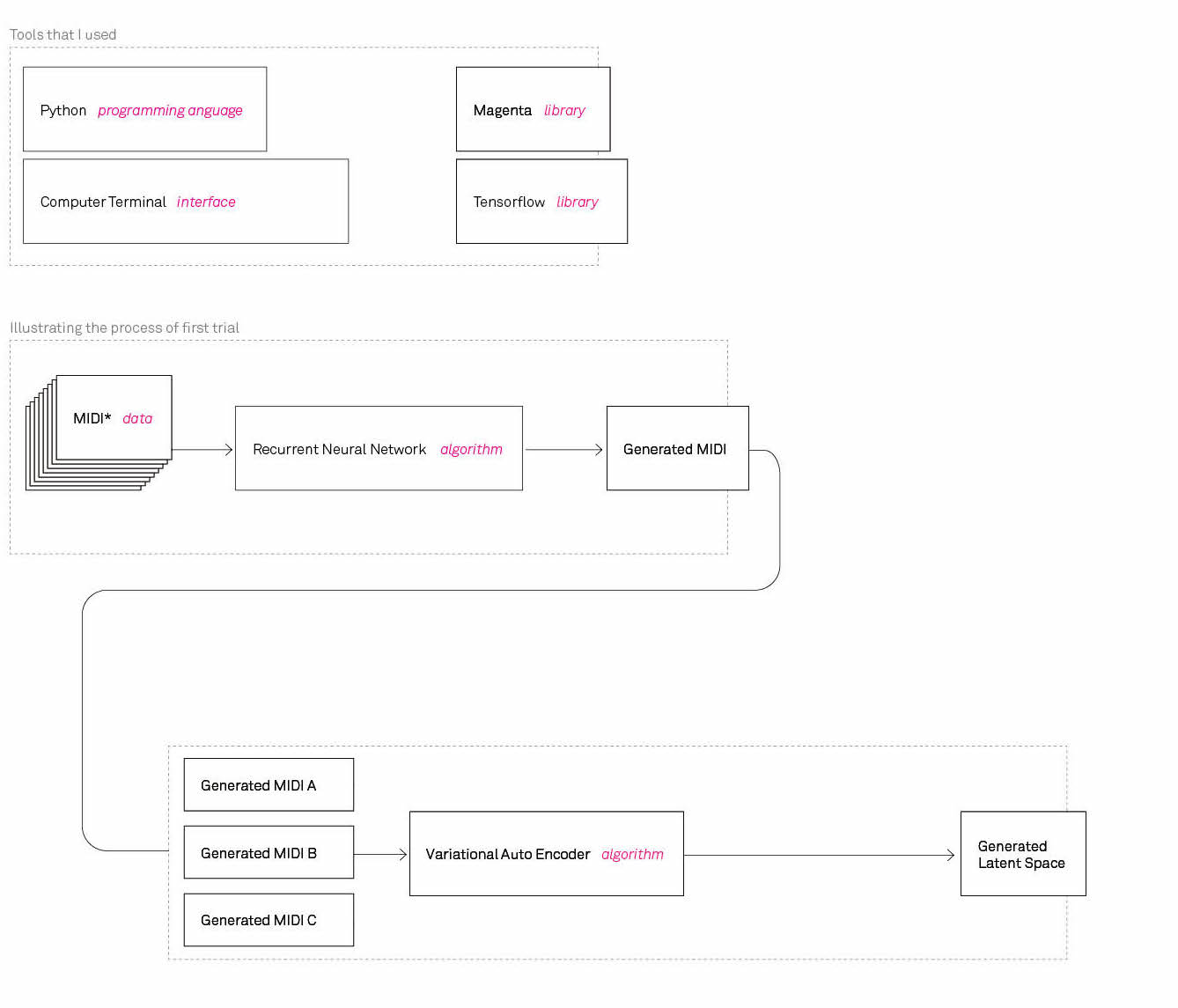

After spending a considerable amount of time understanding the theory behind both musical structures and musicology, I looked into which library I could use to train specific algorithms on MIDI files. Magenta is distributed as an open-source Python library, powered by TensorFlow. It includes utilities for manipulating source data (primarily music and images), using this data to train machine learning models, and finally generating new content from these models. Below I introduce two machine learning approaches for different purposes. On the right, I have attempted to visualize the process behind both approaches.
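As an illustration of the kind of preprocessing such data utilities perform, the sketch below snaps note start and end times (in seconds) onto a grid of discrete steps, so that a model sees a regular sequence instead of free-floating timings. It is a hypothetical stand-in, not Magenta’s API: it assumes a constant tempo of 120 bpm and four steps per quarter note (sixteenth notes), and the `Note` type and `quantize` function are names invented for this example.

```python
from dataclasses import dataclass


# Hypothetical note record: MIDI pitch plus start/end times in seconds.
@dataclass
class Note:
    pitch: int
    start: float
    end: float


def quantize(notes: list[Note], bpm: float = 120.0,
             steps_per_quarter: int = 4) -> list[tuple[int, int, int]]:
    """Snap onsets and offsets to the nearest grid step.

    Returns (pitch, start_step, end_step) tuples. Assumes a constant tempo.
    """
    seconds_per_step = 60.0 / bpm / steps_per_quarter
    quantized = []
    for note in notes:
        start_step = round(note.start / seconds_per_step)
        end_step = round(note.end / seconds_per_step)
        # Keep every note at least one step long after rounding.
        end_step = max(end_step, start_step + 1)
        quantized.append((note.pitch, start_step, end_step))
    return quantized


if __name__ == "__main__":
    # A slightly uneven "human" performance of three notes.
    performance = [Note(60, 0.02, 0.49), Note(64, 0.51, 0.74), Note(67, 0.76, 0.80)]
    print(quantize(performance))
```

The minimum-length rule matters for very short notes: the last note above would otherwise round to zero steps and vanish from the training data.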

Trial 1: Sound to Sound Generation Using an RNN

The first model, a recurrent neural network (RNN), applies language modeling to drum track generation using an LSTM. Unlike melodies, drum tracks are polyphonic, in the sense that multiple drums can be struck simultaneously.
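A language model predicts one token at a time, so a polyphonic drum track first has to be flattened into a single sequence. One way to do that, similar in spirit to the drum-kit configuration of Magenta’s Drums RNN, is to treat the set of drums struck at each step as one token: with nine drum classes, each step becomes a 9-bit mask, giving 2^9 = 512 possible tokens. The sketch below assumes that nine-class setup; the class names and the `encode_step`/`decode_step` helpers are illustrative, not Magenta’s API.

```python
# Hypothetical nine-class drum kit; the names are illustrative.
DRUM_CLASSES = ["kick", "snare", "closed_hat", "open_hat",
                "low_tom", "mid_tom", "high_tom", "crash", "ride"]


def encode_step(drums_hit: set[str]) -> int:
    """Turn the set of drums struck at one step into a single token in [0, 512)."""
    token = 0
    for i, name in enumerate(DRUM_CLASSES):
        if name in drums_hit:
            token |= 1 << i  # bit i set means drum class i is struck
    return token


def decode_step(token: int) -> set[str]:
    """Inverse of encode_step: recover the set of drums from a token."""
    return {name for i, name in enumerate(DRUM_CLASSES) if token & (1 << i)}


if __name__ == "__main__":
    # One bar of eighth-note steps; an empty set is a silent step (token 0).
    bar = [{"kick", "closed_hat"}, {"closed_hat"}, {"snare", "closed_hat"}, {"closed_hat"},
           {"kick", "closed_hat"}, {"kick"}, {"snare", "closed_hat"}, set()]
    tokens = [encode_step(step) for step in bar]
    print(tokens)  # [5, 4, 6, 4, 5, 1, 6, 0]
```

The LSTM itself is not shown: it would be trained to predict the next token from the previous ones, just as a text language model predicts the next word, and each generated token is decoded back into a set of simultaneous drum hits.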

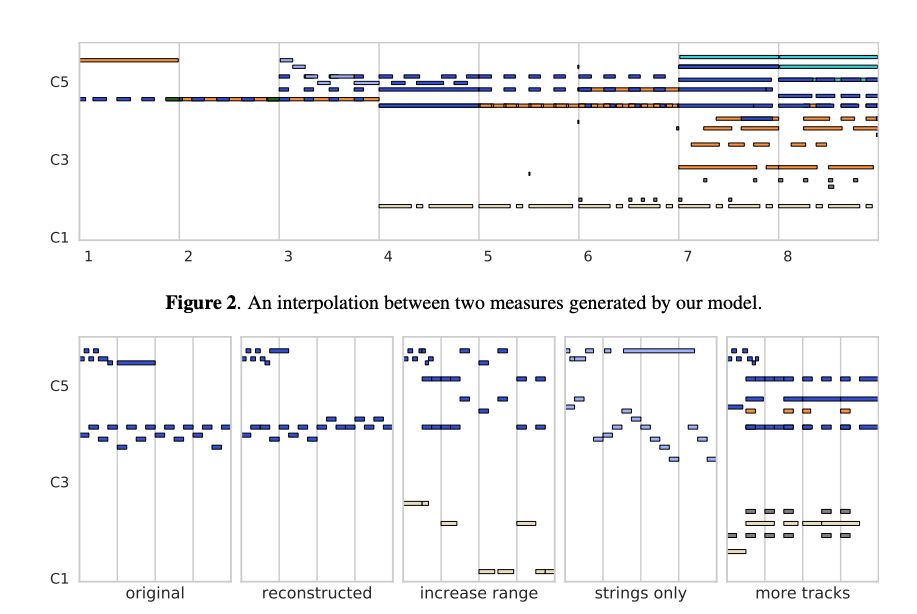

Trial 2: Generating an Interpolation between Sounds or Melodies

When a painter creates a work of art, she first blends and explores color options on an artist’s palette before applying them to the canvas. This process is a creative act in its own right and has a profound effect on the final work. Musicians and composers have mostly lacked a similar device for exploring and mixing musical ideas. Here I introduce MusicVAE, a machine learning model that enables me to create palettes for blending and exploring musical scores.
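The palette idea rests on interpolating in the model’s latent space: encode two melodies as latent vectors, walk between them, and decode each intermediate point back into music. To my knowledge, Magenta’s MusicVAE code uses spherical linear interpolation (slerp) for this walk rather than a straight line. The sketch below shows only the interpolation step; the encoder and decoder are not shown, and the two short vectors are made-up stand-ins for real latent codes, which are much higher-dimensional.

```python
import math


def slerp(p0: list[float], p1: list[float], t: float) -> list[float]:
    """Spherical linear interpolation between vectors p0 and p1 at fraction t in [0, 1]."""
    norm0 = math.sqrt(sum(x * x for x in p0))
    norm1 = math.sqrt(sum(x * x for x in p1))
    cos_omega = sum(a * b for a, b in zip(p0, p1)) / (norm0 * norm1)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))  # clamp against rounding error
    sin_omega = math.sin(omega)
    if math.isclose(sin_omega, 0.0, abs_tol=1e-12):
        # (Anti)parallel vectors: fall back to ordinary linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(p0, p1)]
    w0 = math.sin((1 - t) * omega) / sin_omega
    w1 = math.sin(t * omega) / sin_omega
    return [w0 * a + w1 * b for a, b in zip(p0, p1)]


def interpolate(z_a: list[float], z_b: list[float], steps: int) -> list[list[float]]:
    """Evenly spaced slerp points from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [slerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    z_melody_a = [1.0, 0.0, 0.5]   # made-up stand-in for an encoded melody
    z_melody_b = [0.0, 1.0, -0.5]  # made-up stand-in for a second melody
    for z in interpolate(z_melody_a, z_melody_b, 5):
        print([round(x, 3) for x in z])
```

The usual argument for slerp over a straight line is that in a high-dimensional Gaussian latent space most samples lie near a shell of roughly constant radius; a straight line between two codes cuts through the lower-norm interior, whereas slerp keeps the intermediate codes at a similar distance from the origin.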